Hi, I'm Aniket Vashishtha, a second-year thesis-based master's student in Computer Science at UIUC, advised by Prof. Hao Peng and Prof. Chenhao Tan. I study the cognitive gaps that limit LLMs on reasoning tasks such as counterfactual simulation, using causal principles to analyze failures and design faithful evaluations. I am exploring Reinforcement Learning as an interventional learning paradigm to address these weaknesses, with applications to self-improvement, information seeking, and scientific discovery. I am looking for PhD positions for Fall 2026.

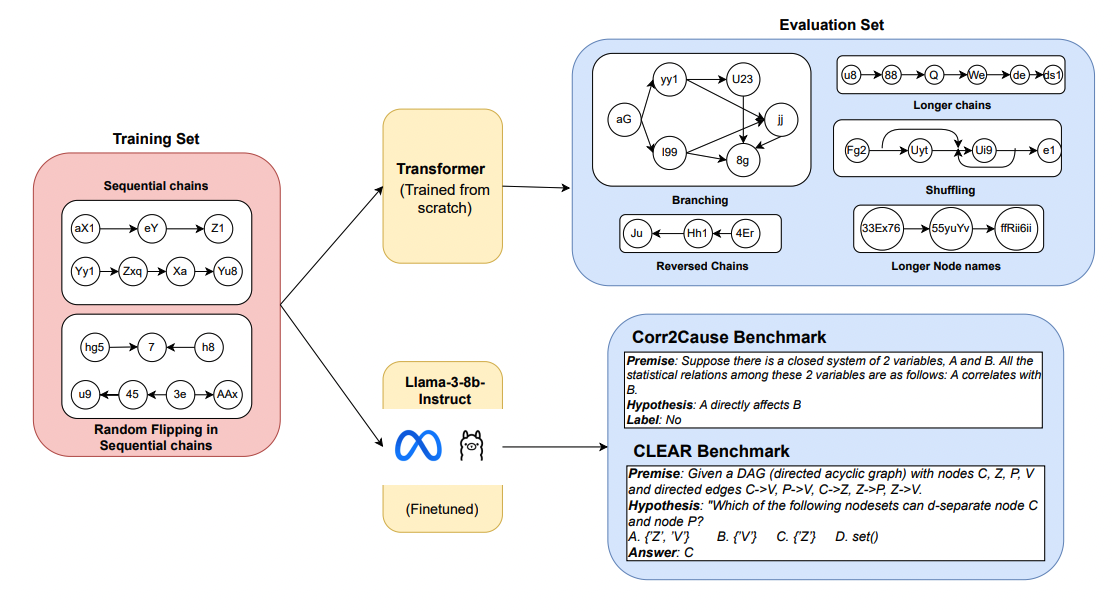

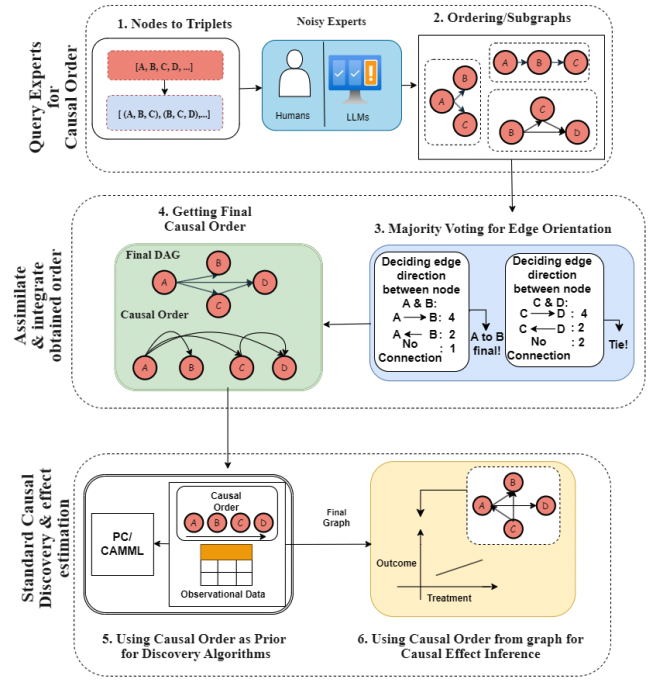

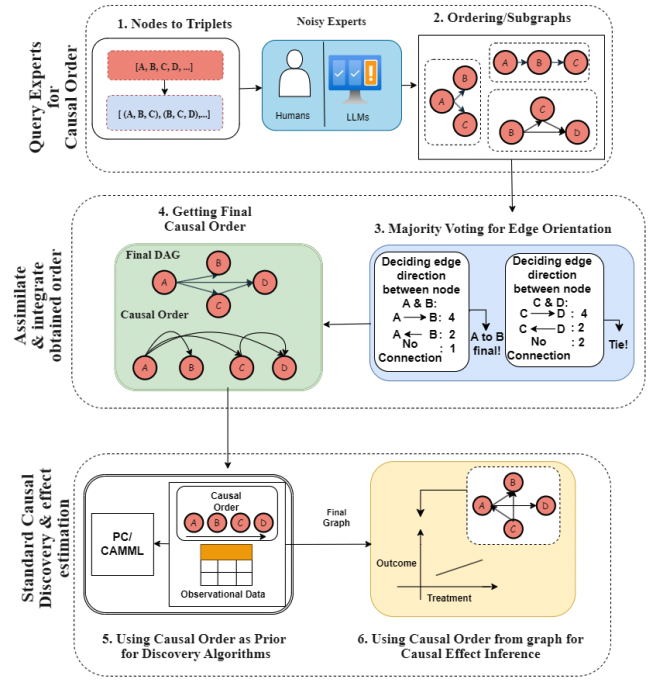

Before UIUC, I was a Predoctoral Research Fellow at Microsoft Research India, where I was fortunate to be mentored by Dr. Amit Sharma and Prof. Vineeth N. Balasubramanian. At MSR, I investigated how to leverage the domain expertise and causal reasoning capabilities of imperfect experts, such as humans and LLMs, for causal graph discovery and effect estimation. I designed and evaluated querying strategies, along with integration methods that optimally combine expert knowledge (from LLMs and humans) with traditional causal discovery approaches. I also led the development of Axiomatic Training, a framework for improving LLMs' causal reasoning abilities by training them on synthetic data reflecting Judea Pearl's axioms of causality. Through this work, we showed that a small decoder transformer (about 67 million parameters) can outperform billion-parameter models such as GPT-4 at applying the causal rules of transitivity and d-separation, and can generalize to causal structures significantly more complex than those seen during training. My work has been covered by multiple newsletters, media outlets, interviews, and a podcast.

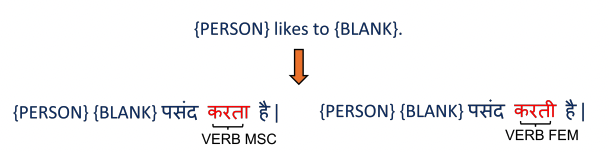

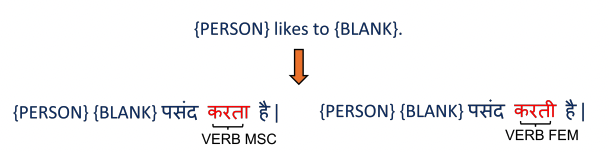

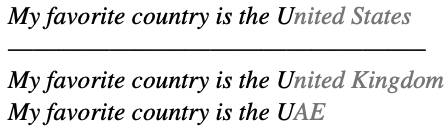

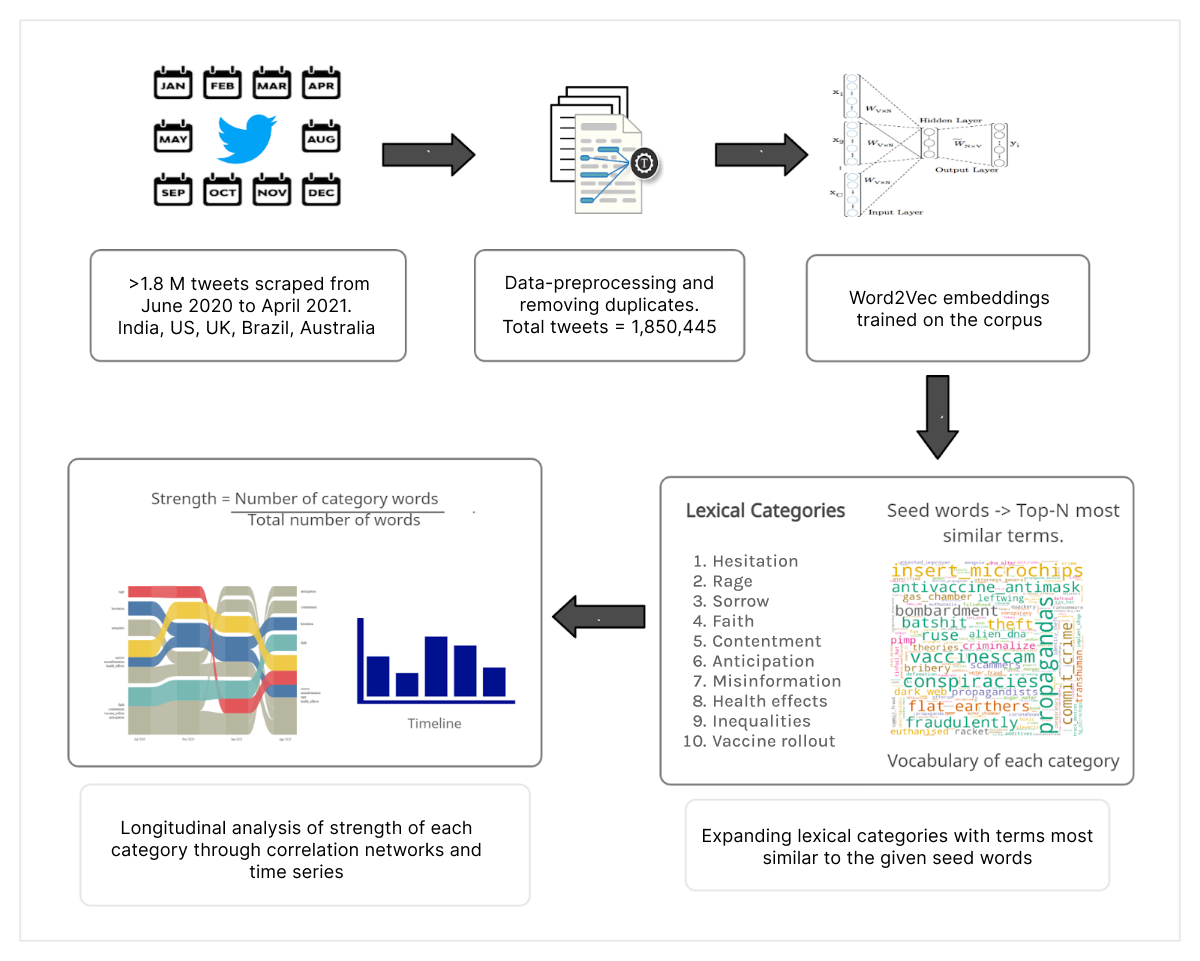

Earlier, I also led projects in Fairness and Responsible AI (RAI), working with Prof. Monojit Choudhury and Dr. Sunayana Sitaram. I led the development of extensive, human-centered benchmarks for evaluating socio-cultural biases in text generation models used for autosuggestion, and in language models in multilingual settings. We built these benchmarks collaboratively with human annotators to extend RAI techniques to underrepresented languages, particularly in the Indian context, and to support robust bias mitigation strategies.

Outside of research, I've been a passionate beatboxer for over 10 years, winning several national championships. I also enjoy working out, cooking, watching anime (Vinland Saga is my current favorite), movies, and reading mythology and philosophy.

For more details about my work, check out my CV or reach out to me by email.

Updates

| Dec 2025: | Awarded $5,000 Tinker Research Grant from Thinking Machines Lab to support my research on causality and LLMs. |

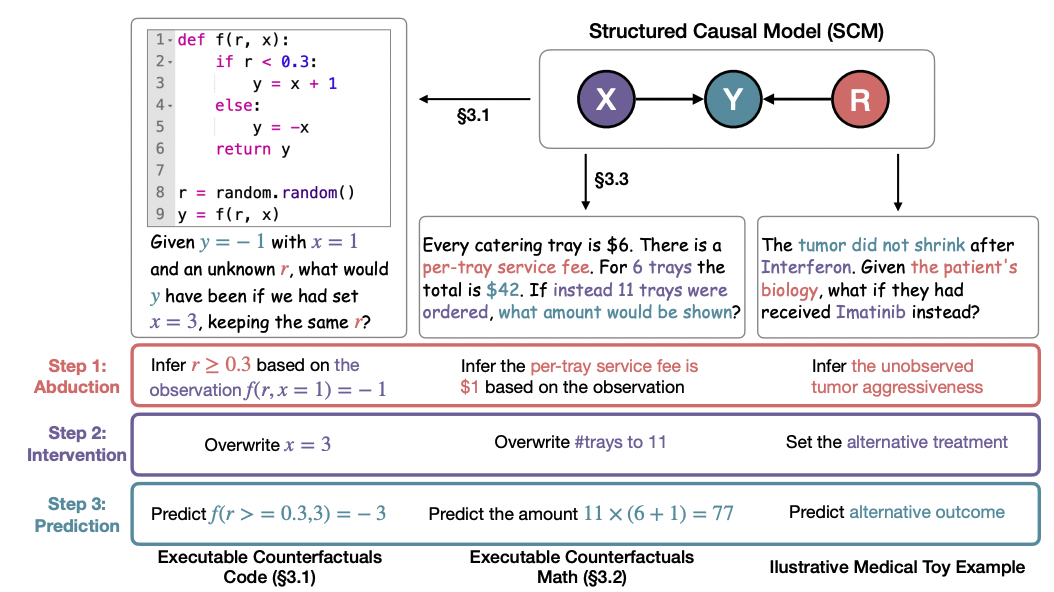

| Dec 2025: | Presented our work on Executable Counterfactuals at the FoRLM workshop at NeurIPS 2025 in San Diego! |

| Dec 2025: | Invited to give a talk about my research on Causality and LLMs at the Statistics Seminar, Indiana University Indianapolis. |

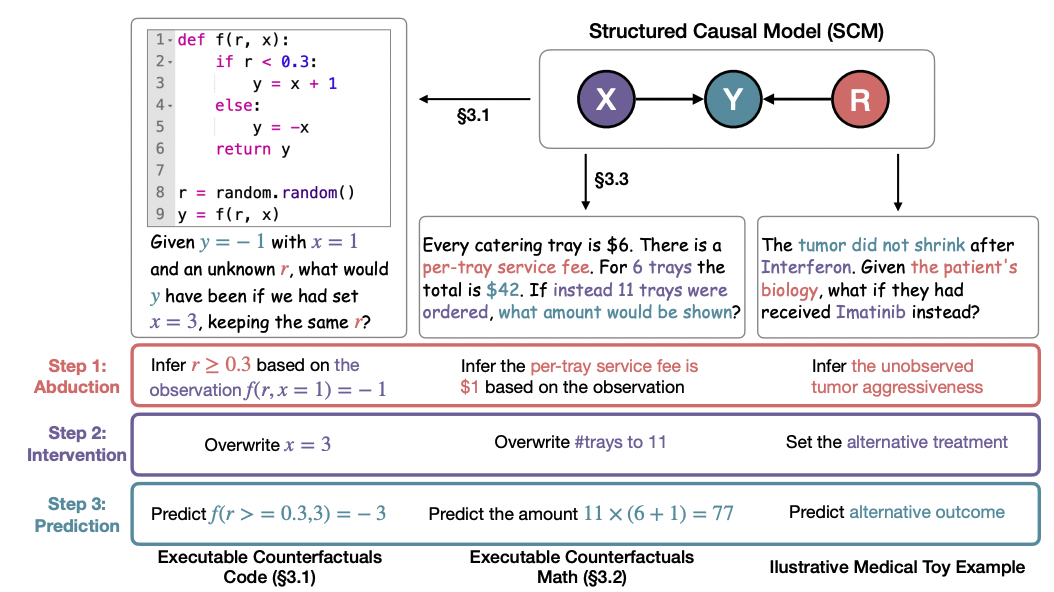

| Sep 2025: | Our paper on evaluating counterfactual reasoning in LLMs through code-based frameworks, and on inducing the cognitive skills it requires via Reinforcement Learning, was released on arXiv and accepted at the FoRLM workshop at NeurIPS 2025! |

| Sep 2025: | Started working with Prof. Jiawei Han as a Research Assistant on building RAG pipelines that extract causal graphs from LLMs for improved multi-hop reasoning. |

| Sep 2025: | My interview with Neptune.ai about my work on Axiomatic Training was published (Interview Post). |

| Jul 2025: | Presenting my work Teaching Transformers Causal Reasoning through Axiomatic Framework at ICML'25 main conference. |

| Apr 2025: | Presenting my work Causal Order: Leveraging Imperfect Experts for Causal Inference at ICLR'25 main conference. |

| Apr 2025: | I was invited to give a talk on my work on LLMs and Causality at CISPA, Germany. |

| Apr 2025: | Dr. Amit Sharma discussed our work on Causal Axiomatic Training on Aleksander Molak's podcast. |

| Jan 2025: | Our paper Causal Order: Leveraging Imperfect Experts for Causal Inference accepted at ICLR'25. |

| Dec 2024: | Dr. Amit Sharma delivered an oral presentation on our work leveraging LLMs' domain expertise for causal discovery at the Causality and Language Models (CaLM) workshop at NeurIPS 2024. |

| Nov 2024: | Gave a talk at the Causal Data Science Summit on our work Teaching Transformers Causal Reasoning through Axiomatic Framework, alongside leading researchers from causality, economics, and language models. |

| Oct 2024: | Our work Causal Order: Leveraging Imperfect Experts for Causal Inference was accepted as an Oral paper at the CaLM workshop at NeurIPS 2024. |

| Sep 2024: | I was awarded the prestigious JN Tata Scholarship (12,000 USD) to support my graduate studies at UIUC. |

| Aug 2024: | Joined UIUC as a thesis-based master's student in Computer Science and started working with Prof. Hao Peng. |

| Jul 2024: | Released our work Teaching Transformers Causal Reasoning through Axiomatic Training on arXiv. The work was well received online (tweet) and covered by tech accounts (coverage). |

| Feb 2024: | Gave an in-person oral talk on our work using LLMs as imperfect experts for causal inference at the AAAI LLM-CP workshop in Vancouver, Canada. |

| Sep 2023: | Invited to lead a 2-day national workshop at St. Dominic's College, Kottayam, Kerala. The workshop introduced students and faculty from non-computer-science backgrounds to LLMs, their capabilities, and their limitations. We also covered how to integrate LLMs strategically into education and how to prevent their misuse. |

| May 2023: | Our paper On Evaluating and Mitigating Gender Biases in Multilingual Settings was accepted at ACL'23 Findings. |

| Apr 2023: | Started working with Dr. Amit Sharma and Prof. Vineeth N. Balasubramanian as a Predoctoral Research Fellow at MSR India. |

| Jan 2023: | Our paper Performance and Risk Trade-offs for Multi-word Text Prediction at Scale was accepted at EACL'23 Findings. |

| Oct 2022: | Our team won first place at the Microsoft Turing Hackathon! We implemented the Inclusivity Toolkit, which diagnoses the biases of language models across various dimensions by bringing together numerous bias detection methods from the literature. |

| Jan 2022: | Started interning in the NLP team at Microsoft Research India with Dr. Monojit Choudhury and Dr. Sunayana Sitaram, focusing on Responsible AI problems. |

Publications

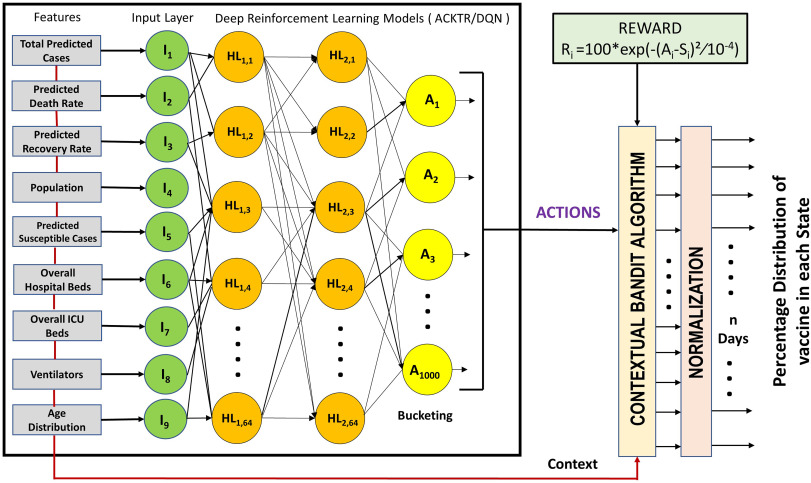

Executable Counterfactuals: Improving LLMs' Causal Reasoning Through Code

Aniket Vashishtha*, Qirun Dai*, Hongyuan Mei, Amit Sharma, Chenhao Tan, Hao Peng (* = Equal Contribution)

FoRLM @ NeurIPS'25 | Foundations of Reasoning in Language Models Workshop at NeurIPS'25

pdf abstract

Causal Order: Leveraging Imperfect Experts for Causal Inference

Aniket Vashishtha, Abbavaram Gowtham Reddy, Abhinav Kumar, Saketh Bachu, Vineeth N Balasubramanian, and Amit Sharma

ICLR'25 | International Conference on Learning Representations

Spotlight @ CaLM Workshop, NeurIPS'24 | Causality and Language Models Workshop

pdf abstract

On Evaluating and Mitigating Gender Biases in Multilingual Settings

Aniket Vashishtha*, Kabir Ahuja*, and Sunayana Sitaram (* = Equal Contribution)

ACL'23 Findings | Annual Conference of the Association for Computational Linguistics

pdf abstract

Performance and Risk Trade-offs for Multi-word Text Prediction at Scale

Aniket Vashishtha, S Sai Prasad, Payal Bajaj, Vishrav Chaudhary, Kate Cook, Sandipan Dandapat, Sunayana Sitaram, Monojit Choudhury

EACL'23 Findings | European Chapter of the Association for Computational Linguistics

pdf abstract

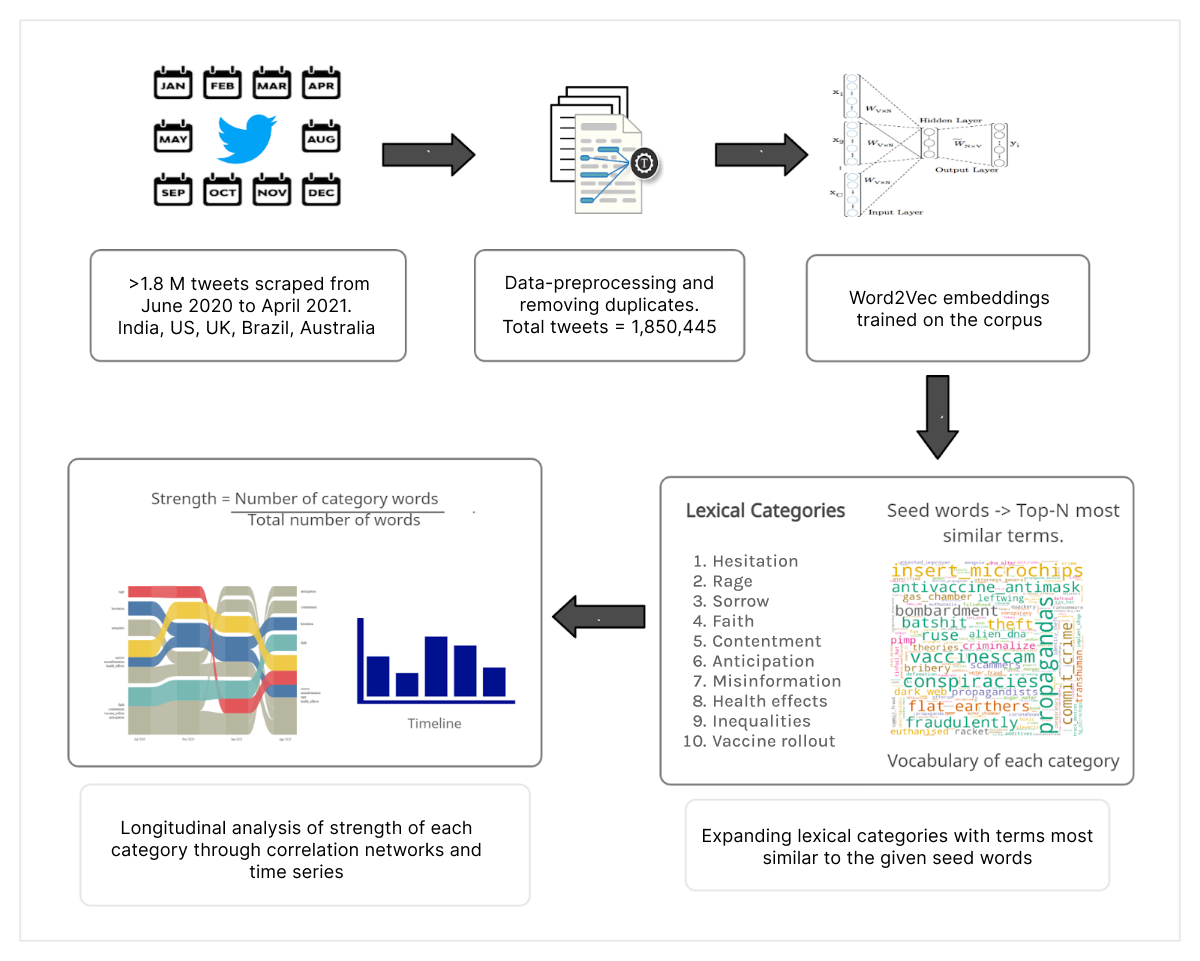

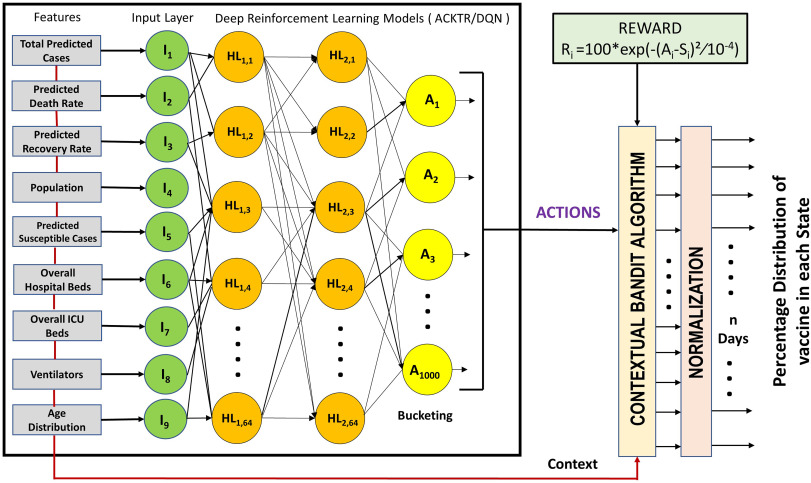

VacSIM: Learning Effective Strategies for COVID-19 Vaccine Distribution using Reinforcement Learning

Raghav Awasthi, Keerat Kaur Guliani, Saif Ahmad Khan, Aniket Vashishtha, Mehrab Singh Gill, Arshita Bhatt, Aditya Nagori, Aniket Gupta, Ponnurangam Kumaraguru, Tavpritesh Sethi

Intelligence-Based Medicine | Elsevier Journal

pdf abstract